I've spent a good chunk of the past year running AI workshops. Different companies, different industries, wildly different levels of technical sophistication. What's struck me isn't how much varies between them - it's how much stays stubbornly the same.

The same patterns keep emerging. The same mistakes get made. The same gaps appear between what people think they need and what actually moves the needle.

Here's what I've learned.

1. Task-focused thinking creates blind spots

People aren't exploring AI's strengths and capabilities. They're only asking how AI can help with their existing tasks. On the surface, that seems perfectly logical. The reasoning goes: why would I bother understanding AI's capabilities? I'm not interested in the technology itself. I just want something that augments what I already do.

The problem is where this mindset leads. People hyper-focus on the obvious, familiar stuff: producing content, using it like a smarter Google. That's their frame of reference. Years of typing queries into search boxes have conditioned them to default there.

Meanwhile, they're not even considering workflows. Not thinking about agentic capabilities - having AI actually execute multi-step processes autonomously. Not exploring data analysis, which is where some of the most immediate value sits for most businesses.

I understand why. If you've never seen what's possible, you can't ask for it. Your imagination gets constrained by your experience. Someone sits down with ChatGPT or Claude and reaches for what they know: write me an email, summarise this document, explain this concept.

None of that is wrong. But there's a massive missed opportunity underneath it. The gap between how people use these tools and what they can actually do remains enormous - and it's not closing as fast as you'd think, even as the models improve.

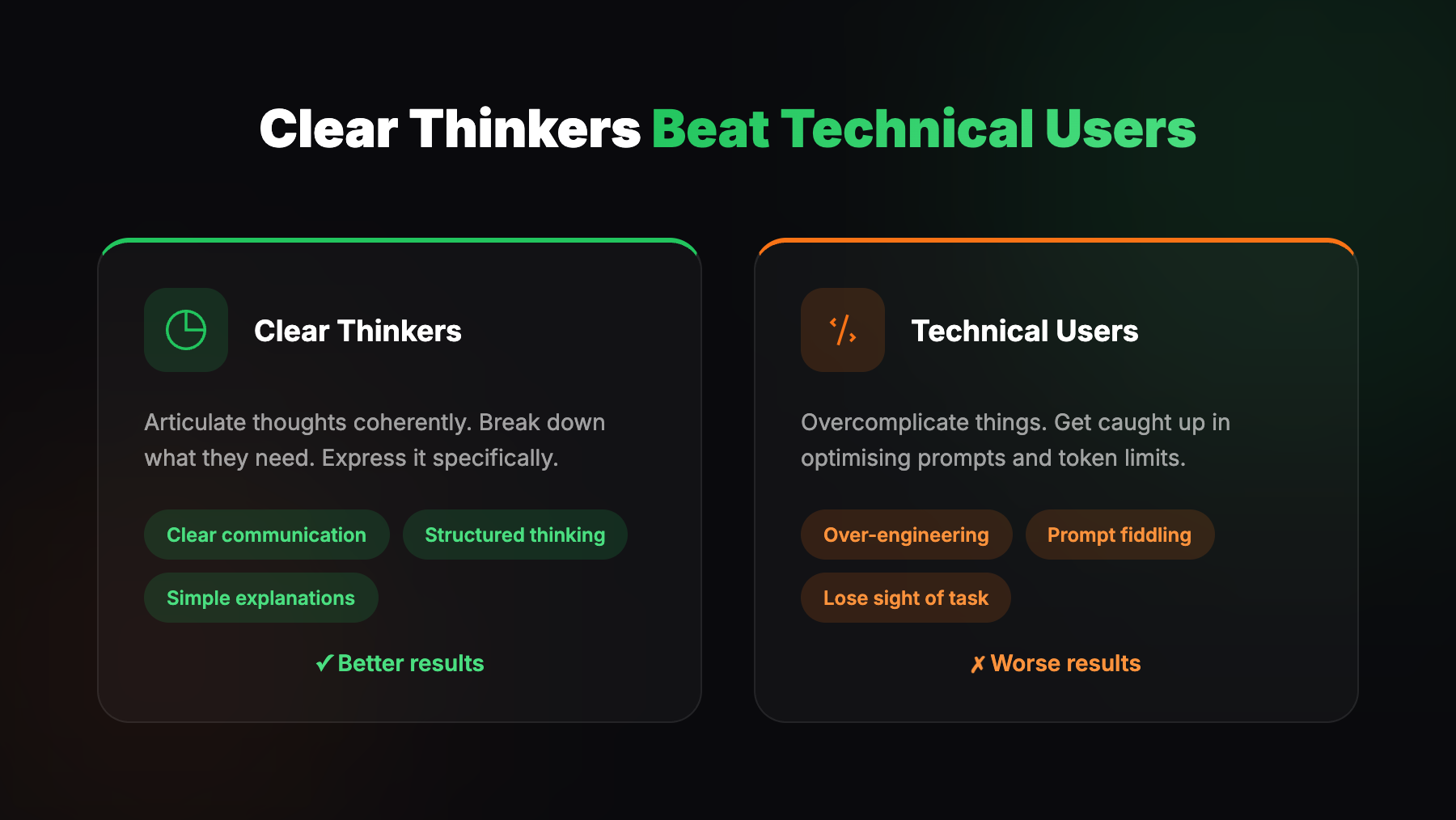

2. Clear thinkers beat technical users

This one surprised me. I'd assumed the technical users - developers, data people, those who understand the technology under the hood - would naturally get better results.

They don't.

The users who get the best results are those who can articulate their thoughts coherently and think through the steps needed to achieve what they want. Clear thinking. Clear communication. The ability to break down what you need and express it specifically and structurally.

The technically inclined often get worse results. They overcomplicate things. They get caught up optimising prompts, understanding token limits, fiddling with temperature settings and system prompts - and lose sight of the basic task: explaining clearly what you want.

This has real implications for AI training. Companies assume they need to upskill the "non-technical" people, bring them up to speed on how the technology works. But some of those people are already better equipped than their technical colleagues. They've spent careers communicating clearly, structuring thoughts, explaining complex things simply. That skill transfers directly.

The technically inclined need different training: how to step back, simplify, and resist the urge to over-engineer.

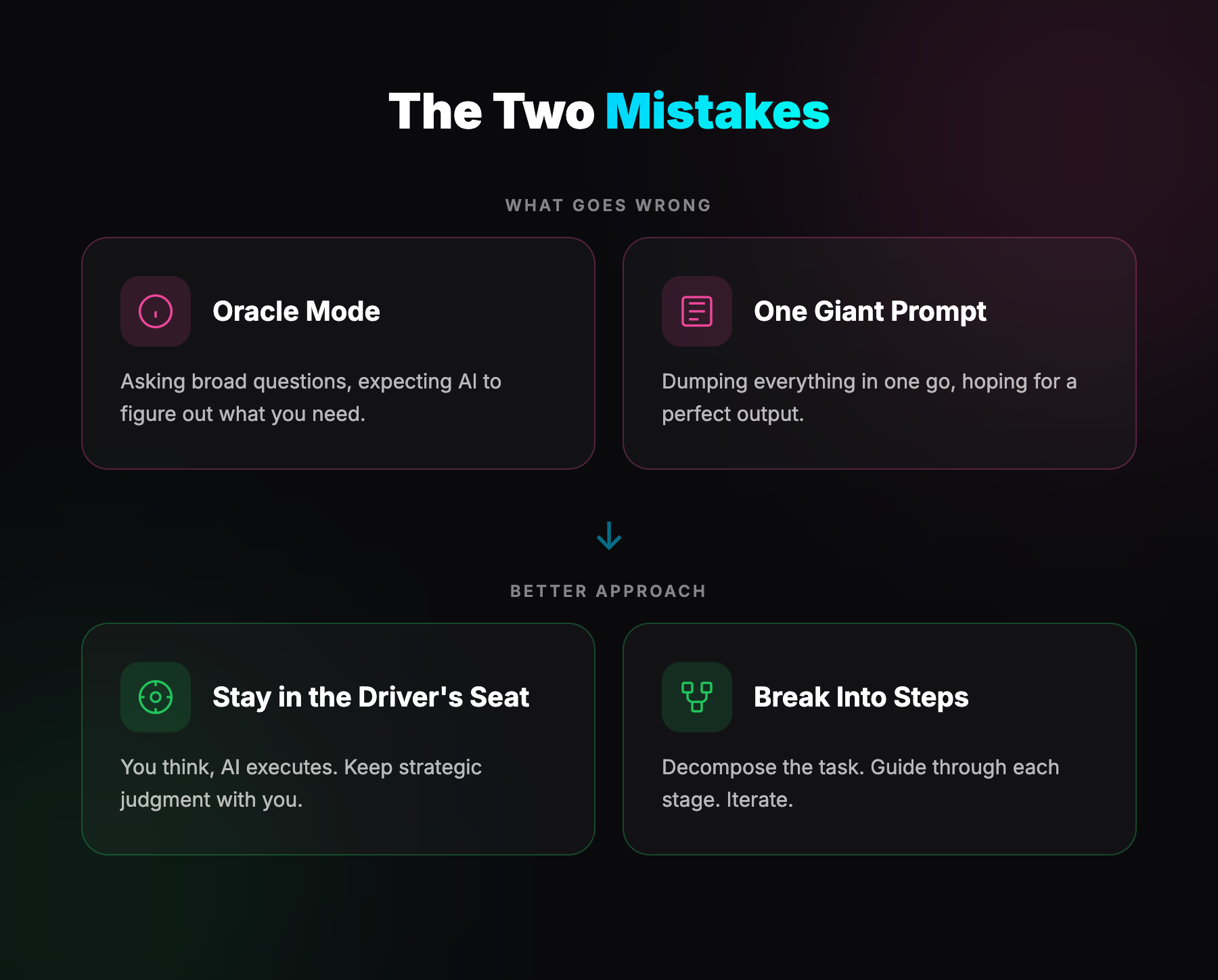

3. The two real mistakes

When I started, I expected the common mistakes to involve poorly structured prompts or wrong mental models of how LLMs work.

Those aren't the problems.

The two biggest mistakes I see - over and over - are more fundamental.

First: getting the LLM to do the thinking for them. People treat it like an oracle. They ask broad, open questions and expect the model to figure out what they need. "What should my marketing strategy be?" "How do I solve this problem?" For anything specialised or context-dependent, this is hazardous. The models aren't there yet for that kind of reasoning - and even as they improve, outsourcing your thinking entirely is a bad habit. You need to stay in the driver's seat.

Second: not breaking tasks into granular enough steps. People try to get everything done in one prompt. They dump a massive, complex request and expect a perfect output. It doesn't work that way. The magic happens when you decompose the task - step by step, piece by piece - and guide the model through each stage.

Both mistakes share the same root: expecting AI to replace judgment rather than augment it.

4. Theory doesn't land - tangible examples do

I had to completely change how I run these workshops. Initially, I loaded them with theory. Mental models for thinking about AI. Frameworks for understanding capabilities. Conceptual foundations.

It wasn't landing.

What I found is that people only care about three things.

First, seeing the tools available. Not just ChatGPT - the whole landscape. What's out there? What can different tools do? This opens minds and gets people out of the narrow frame they arrived with.

Second, tangible examples of outputs. Real things I've created for myself and clients. Not hypothetical use cases - actual artifacts they can examine and think "oh, that's what it produces." This is where belief shifts. When someone sees a real output that took minutes instead of the hours it would otherwise have taken, something clicks.

Third, help mapping their own workflows. Not generic processes - their specific tasks. And crucially, honest guidance on which tools can and can't do what they're attempting. There's enormous hype out there, and people need straight talk about current limits.

Theory has its place. But it's not where you start. Show, don't tell.

5. Leaders who don't practice shouldn't mandate

The gap between what managers want and what teams need is more fragmented than it appears.

Some managers just want the team to "use AI." That's the mandate. Use it. Get on board. But they haven't thought through what it's actually for. They're riding the market wave - AI is everywhere, competitors are talking about it, there's pressure to appear innovative. So the instruction comes down: start using these tools.

Here's the thing. Those same managers often aren't practitioners themselves. They're not using AI in any meaningful way. Maybe a few chats in ChatGPT. Maybe they've played with it once or twice. But they're not in there daily, building workflows, testing limits, learning what works.

This creates a gap in expectation and alignment. The team gets told to adopt something leadership doesn't understand. No shared language. No shared experience of what's hard and what's easy, what's useful and what's hype.

My view: leaders need to grasp AI properly before enforcing it on teams. Not expert-level. Practitioner-level. They need enough experience to know what they're asking for. Otherwise the mandate is hollow - and the team knows it.

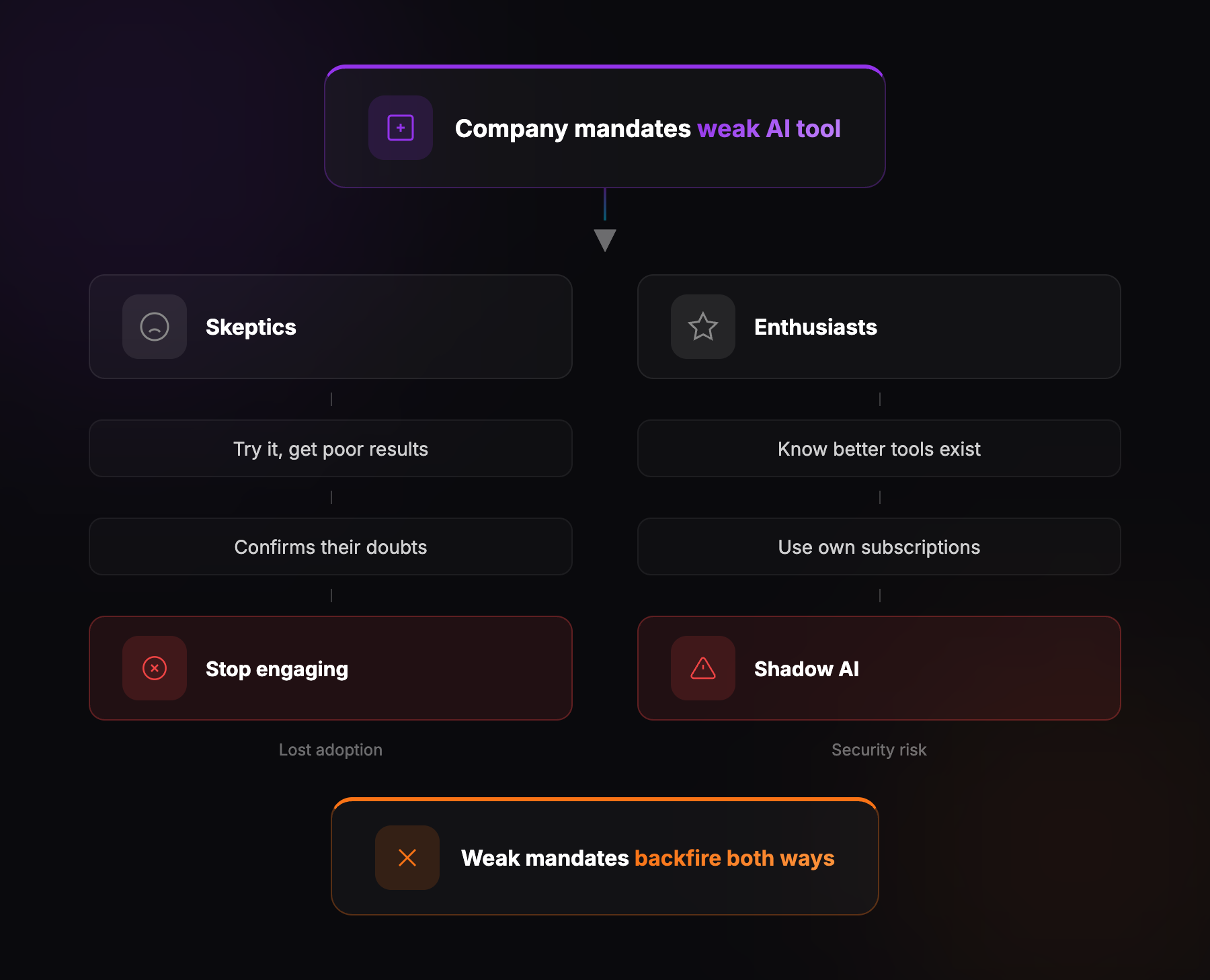

6. Weak tool mandates backfire both ways

One of the most common blockers has nothing to do with skills or mindset. It's the gap between what people want to use and what the company has signed up for.

A lot of companies force Copilot on teams. Copilot isn't useless - but it's the weakest available option. Less capable models. Less impressive outputs. When that's all people have access to, it shapes their perception of what AI can do.

For the already-skeptical, this confirms their doubts. They try it, get mediocre results, conclude the whole thing is overhyped. Appetite to learn disappears. Why invest time mastering something that doesn't seem to work?

But here's where it gets problematic. The enthusiasts - those who've seen what better models can do - don't accept the limitation. They work around it. Pay for their own subscriptions. Use free tiers of other tools. And they start using those tools with company data.

This creates shadow AI. External tools processing sensitive information without oversight. No governance. No idea where data is going.

Weak tool mandates backfire in both directions. They kill enthusiasm in one group and create security risks in the other. Neither outcome is what the company intended.

7. Cultural infrastructure separates the implementers

What actually separates companies that implement from those that don't? Not just enthusiasm. Not just having a few keen individuals. It comes down to real infrastructure.

Is there L&D budget allocated specifically for AI? Not vague "we should do something" - actual money set aside, actual programs running.

Are trainers being brought in? People who can guide teams through the learning curve, answer questions, demonstrate what good looks like.

Is there excitement about creating workflows and prototypes - and critically, a structure for sharing them? Not casual hallway conversations with people you happen to like. Actual forums. Actual processes. A culture where someone builds something useful and it spreads across the organisation.

Without this infrastructure, AI adoption fragments. Everyone figures things out alone - or doesn't. Knowledge stays siloed. Best practices don't spread. You get pockets of capability but no real transformation.

The companies moving forward have made this intentional. They've created conditions for adaptation - not just told people to adapt.

The bottom line

None of this is complicated. But it requires honesty about where companies actually are versus where they think they are.

The technology moves fast. The capability gap is real. Most organisations make it harder on themselves than necessary - wrong tool choices, wrong training approaches, leadership that mandates without understanding.

The ones getting this right aren't necessarily the most technically sophisticated. They're the ones who've thought clearly about what they're trying to achieve, invested properly in helping people learn, and created conditions for that learning to spread.

That's what moves the needle. Everything else is noise.